Back Propagation

- Admin

- May 28, 2021


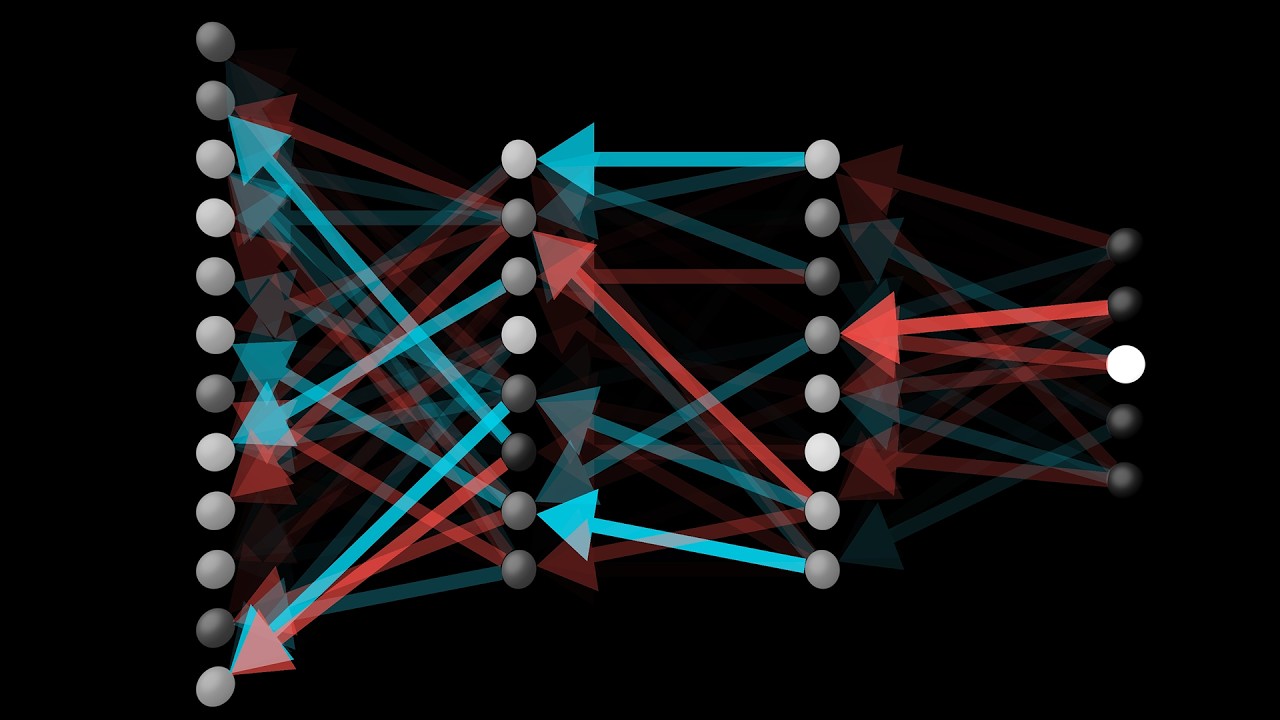

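Backpropagation is a technique used when training neural networks to compute the gradient of the loss with respect to every model parameter, i.e. the model weights and biases. These gradients are then used, for example by gradient descent, to update the parameters so that the model performs as expected.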
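As a minimal sketch of the parameter update that the gradients feed into, assume a one-weight model $\hat{y} = w x$ trained on a single example with the L2 loss $(w x - y)^2$ and plain gradient descent. The function name `train_single_weight`, the learning rate and the number of steps are illustrative choices, not part of the original post.

```python
def train_single_weight(x, y, lr=0.05, steps=100, w=0.0):
    """Fit y_hat = w * x to one example with gradient descent (illustrative)."""
    for _ in range(steps):
        grad = 2.0 * (w * x - y) * x  # dL/dw for L = (w * x - y) ** 2
        w = w - lr * grad             # move w against the gradient
    return w


w = train_single_weight(x=2.0, y=6.0)
print(round(w, 6))  # 3.0, since 3.0 * 2.0 == 6.0
```

Each step moves the weight a small amount in the direction that lowers the loss; backpropagation's job is to supply `grad` when the model has many layers and the derivative can no longer be written down by hand this easily.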

Steps followed in Backpropagation:

Calculate the loss value for the model using a loss (divergence) function, such as the L2 (squared error) loss or the KL divergence.
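A minimal sketch of the two loss functions named above, for plain Python lists. It assumes the L2 loss is the sum of squared errors (some texts use the mean or add a factor of 1/2) and that the KL divergence inputs are discrete probability distributions with $q_i > 0$ wherever $p_i > 0$. The function names are illustrative.

```python
import math


def l2_loss(predictions, targets):
    """Sum of squared differences between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets))


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


print(l2_loss([1.0, 2.0], [1.5, 1.0]))        # 1.25
print(kl_divergence([0.5, 0.5], [0.5, 0.5]))  # 0.0
print(kl_divergence([1.0, 0.0], [0.5, 0.5]))  # log(2), about 0.693
```

Both functions return 0 only when the prediction matches the target exactly, which is what makes them usable as a signal for how far the model is from the expected output.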

After calculating the loss value, take the derivative of the loss with respect to the model parameters, i.e. the weights and biases.
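To make this step concrete, here is a hedged sketch for the simplest case, a single linear neuron $\hat{y} = w x + b$ with the L2 loss $(\hat{y} - y)^2$, where the derivatives can be written by hand: $\partial L / \partial w = 2(\hat{y} - y)\,x$ and $\partial L / \partial b = 2(\hat{y} - y)$. The central-difference check is a common way to sanity-test hand-derived gradients; the function names and numbers are illustrative.

```python
def loss(w, b, x, y):
    # L2 loss of a single linear neuron y_hat = w * x + b on one example
    return (w * x + b - y) ** 2


def gradients(w, b, x, y):
    # Analytic derivatives of the loss with respect to w and b
    error = (w * x + b) - y
    return 2.0 * error * x, 2.0 * error


def finite_difference(f, value, eps=1e-6):
    # Central-difference approximation of df/dvalue
    return (f(value + eps) - f(value - eps)) / (2 * eps)


w, b, x, y = 0.5, 0.1, 2.0, 3.0
dw, db = gradients(w, b, x, y)
print(dw, db)  # approximately -7.6 and -3.8
print(finite_difference(lambda v: loss(v, b, x, y), w))  # close to dw
print(finite_difference(lambda v: loss(w, v, x, y), b))  # close to db
```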

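A basic neural network contains an input layer, one or more hidden layers, and an output layer, so the loss depends on the parameters of every layer in between, not only on those of the output layer. The derivatives for these intermediate layers must also be calculated; the chain rule lets us compute them layer by layer, starting at the output and moving backwards towards the input.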
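A minimal sketch of how the chain rule reaches a hidden layer, assuming a tiny network with one input, one sigmoid hidden unit and one linear output unit, trained with the L2 loss. The parameter names `w1`, `b1`, `w2`, `b2` and all helper functions are hypothetical. The backward pass starts at the output and reuses each intermediate derivative (`d_yhat`, `d_h`, `d_z1`) for the layer before it; a finite-difference check confirms the result.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    # Hidden layer: one sigmoid unit; output layer: one linear unit
    h = sigmoid(params["w1"] * x + params["b1"])
    y_hat = params["w2"] * h + params["b2"]
    return h, y_hat


def loss(params, x, y):
    _, y_hat = forward(params, x)
    return (y_hat - y) ** 2


def backward(params, x, y):
    h, y_hat = forward(params, x)
    d_yhat = 2.0 * (y_hat - y)       # dL/dy_hat, start at the output
    d_w2, d_b2 = d_yhat * h, d_yhat  # output-layer parameters
    d_h = d_yhat * params["w2"]      # chain rule back into the hidden layer
    d_z1 = d_h * h * (1.0 - h)       # sigmoid'(z1) = h * (1 - h)
    d_w1, d_b1 = d_z1 * x, d_z1      # hidden-layer parameters
    return {"w1": d_w1, "b1": d_b1, "w2": d_w2, "b2": d_b2}


def numerical_grad(params, x, y, name, eps=1e-6):
    # Central difference on one parameter, used only to check backward()
    plus = dict(params, **{name: params[name] + eps})
    minus = dict(params, **{name: params[name] - eps})
    return (loss(plus, x, y) - loss(minus, x, y)) / (2 * eps)


params = {"w1": 0.4, "b1": -0.2, "w2": 1.5, "b2": 0.1}
grads = backward(params, x=1.0, y=0.0)
for name in params:
    print(name, grads[name], numerical_grad(params, 1.0, 0.0, name))
```

The hidden-layer gradients `d_w1` and `d_b1` are never computed from scratch: they are built from `d_h`, which in turn comes from the output-layer derivative, which is why the procedure runs backwards.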

Combining these intermediate derivatives through the chain rule gives the total derivative of the loss with respect to every model parameter.
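For a network with more layers, the same procedure is usually written as a recursion. The notation here is ours, not the post's: assume $K$ fully connected layers with pre-activations $z^{(l)} = W^{(l)} a^{(l-1)} + b^{(l)}$, activations $a^{(l)} = \sigma(z^{(l)})$, input $a^{(0)} = x$ and loss $\mathcal{L}$. Writing $\delta^{(l)} = \partial \mathcal{L} / \partial z^{(l)}$ for the intermediate derivatives and $\odot$ for element-wise multiplication, they combine into the total derivatives as follows (for a linear output layer, $\sigma'(z^{(K)}) = 1$):

$$
\begin{aligned}
\delta^{(K)} &= \frac{\partial \mathcal{L}}{\partial a^{(K)}} \odot \sigma'\!\left(z^{(K)}\right) \\
\delta^{(l)} &= \left(\left(W^{(l+1)}\right)^{\top} \delta^{(l+1)}\right) \odot \sigma'\!\left(z^{(l)}\right), \qquad l = K-1, \dots, 1 \\
\frac{\partial \mathcal{L}}{\partial W^{(l)}} &= \delta^{(l)} \left(a^{(l-1)}\right)^{\top}, \qquad
\frac{\partial \mathcal{L}}{\partial b^{(l)}} = \delta^{(l)}
\end{aligned}
$$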
