Recurrent Neural Networks (RNNs)

- Admin

- Jun 18, 2021

Updated: Jun 21, 2021

In a Convolutional Neural Network, we have seen that each input has its own associated output: the present output has no relationship to past inputs. In RNNs, by contrast, the present output depends on past inputs.

In RNNs, information from the previous inputs (carried in a hidden state) is passed along with the current input to compute the current output, so past inputs directly shape the model's current output.

RNNs are used in a wide variety of applications, such as:

Stock Market predictions

Natural Language Processing

Weather predictions

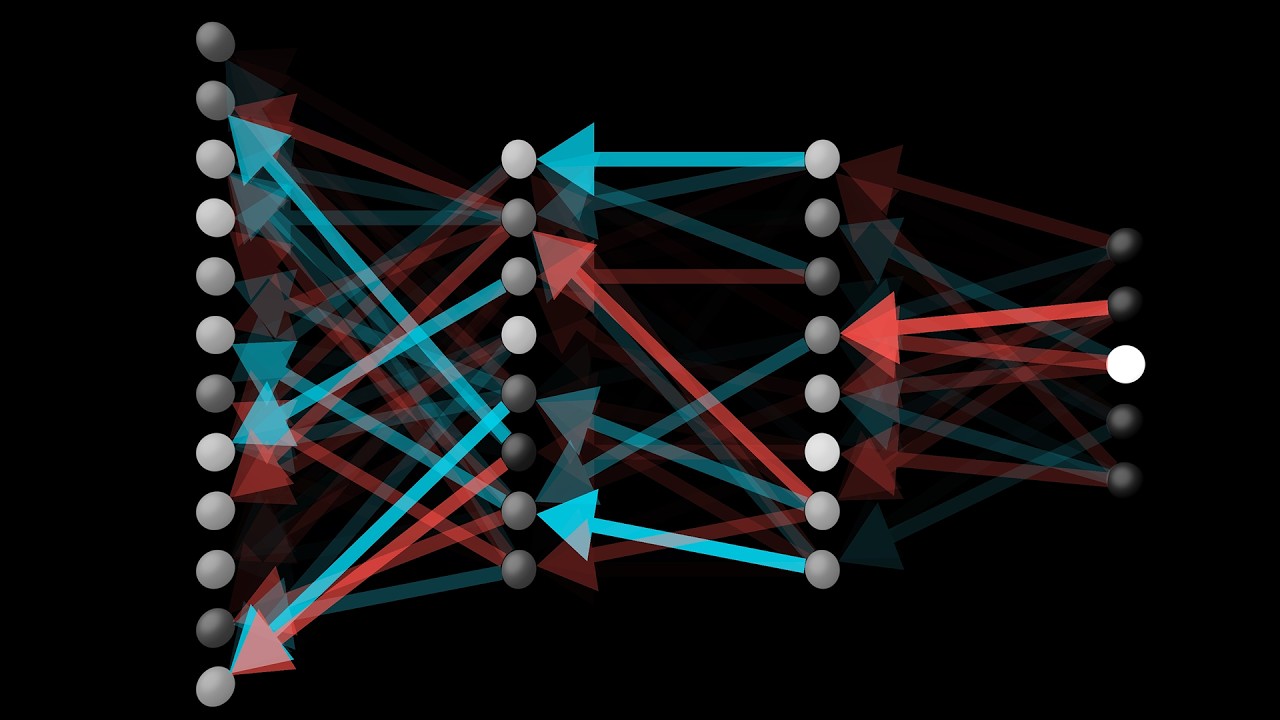

Basic structure of RNN:

In the figure above, we can see that the first hidden state [ A ] is calculated from the input X0. That hidden state is then passed to the next layer together with that layer's own input X1, and the two are used to calculate both the next hidden state and that layer's output.

This process repeats until the last layer: each layer's hidden state is passed on as an input, along with the next layer's own input, to calculate that layer's hidden state and output. Because the same computation recurs at every layer, the architecture is called a "Recurrent Neural Network".
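The recurrence described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the weight names (`W_xh`, `W_hh`, `W_hy`), the `tanh` activation, the zero initial hidden state, and the layer sizes are all assumptions chosen for the example.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y):
    """Run a simple RNN over a sequence; return per-step outputs and hidden states."""
    h = np.zeros(W_hh.shape[0])          # initial hidden state (assumed to be zeros)
    hs, ys = [], []
    for x in xs:                          # one iteration per input X0, X1, ...
        # New hidden state from the current input and the previous hidden state
        h = np.tanh(W_xh @ x + W_hh @ h + b_h)
        y = W_hy @ h + b_y                # output of this step
        hs.append(h)
        ys.append(y)
    return np.array(ys), np.array(hs)

# Hypothetical sizes and random weights, for illustration only
rng = np.random.default_rng(0)
input_size, hidden_size, output_size, steps = 3, 4, 2, 5
xs = rng.normal(size=(steps, input_size))
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
W_hy = rng.normal(scale=0.5, size=(output_size, hidden_size))
b_h = np.zeros(hidden_size)
b_y = np.zeros(output_size)

ys, hs = rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y)
print(ys.shape, hs.shape)
```

Note that the same weights are reused at every step; only the hidden state `h` changes as it carries information forward.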

Disadvantages of RNNs: Because past inputs are taken into consideration when calculating the present layer's output, RNNs have some disadvantages:

Vanishing gradient problem: During training, the gradient for an earlier layer is obtained by multiplying together the gradients of the layers that come after it. Some past inputs have only a very small influence on the present output, so these factors are small, and their product can shrink to a value close to zero. When that happens, the model parameters (weights and biases) can no longer be updated properly.
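A small numerical sketch can make the shrinking gradient concrete. It assumes a scalar RNN of the form h_t = tanh(w * h_(t-1) + x) with a hypothetical weight `w = 0.9` and a constant input; none of these values come from the article.

```python
import numpy as np

def gradient_through_time(w, steps, x=0.5):
    """Return |d h_T / d h_t| for earlier hidden states in a scalar tanh RNN."""
    h = 0.0
    local = []                            # local derivative d h_t / d h_(t-1) per step
    for _ in range(steps):
        h = np.tanh(w * h + x)
        local.append(w * (1.0 - h ** 2))  # chain rule: tanh'(a) = 1 - tanh(a)^2
    # Multiply the local derivatives while walking backward from the last step
    grads, g = [], 1.0
    for d in reversed(local):
        g *= d
        grads.append(abs(g))
    return grads                          # grads[k]: influence of the state k+1 steps back

grads = gradient_through_time(w=0.9, steps=30)
print(f"1 step back:   {grads[0]:.3e}")
print(f"30 steps back: {grads[-1]:.3e}")
```

Every local derivative here has magnitude below 1, so each extra step back multiplies the gradient by another small factor and the influence of distant steps fades rapidly.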

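RNNs are dependent on the model parameters (weights and biases): How strongly past information influences the present output is governed largely by the eigenvalues of the recurrent weight matrix, while the inputs themselves have comparatively little influence. Roughly speaking, eigenvalues with magnitude below 1 make the influence of old inputs fade, and magnitudes above 1 make it grow.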
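The role of the eigenvalues can be illustrated with a linearised sketch that ignores the activation function and simply multiplies by the recurrent weight matrix repeatedly. The matrix below is random and made symmetric purely to keep the arithmetic clean; both choices, and the scale factors 0.8 and 1.2, are assumptions for the example.

```python
import numpy as np

def spectral_radius(W):
    """Largest eigenvalue magnitude of W."""
    return np.max(np.abs(np.linalg.eigvals(W)))

def repeated_influence(W, steps):
    """Spectral norm of W^k for k = 1..steps (a linear proxy for long-range influence)."""
    P = np.eye(W.shape[0])
    norms = []
    for _ in range(steps):
        P = W @ P
        norms.append(np.linalg.norm(P, 2))
    return norms

rng = np.random.default_rng(0)
A = rng.normal(size=(4, 4))
base = (A + A.T) / 2.0                 # symmetric matrix (assumption, for simplicity)
base /= spectral_radius(base)          # rescale so the largest |eigenvalue| is 1

small = 0.8 * base                     # largest |eigenvalue| = 0.8
large = 1.2 * base                     # largest |eigenvalue| = 1.2

norms_small = repeated_influence(small, 50)
norms_large = repeated_influence(large, 50)
print(f"radius 0.8 after 50 steps: {norms_small[-1]:.3e}")
print(f"radius 1.2 after 50 steps: {norms_large[-1]:.3e}")
```

In this simplified setting the long-range behaviour is set entirely by the weights: the same inputs would be forgotten with one matrix and amplified with the other.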

So we want a model architecture that copes better with vanishing gradients and is also less dependent on the model parameters.

Long Short Term Memory ( LSTM ): The disadvantages above are why LSTMs come into the picture. LSTMs are advanced RNNs designed to mitigate vanishing gradients and to be less dependent on the model parameters.

To reduce the dependence on the model parameters, LSTMs use gates to filter the information passed into each layer.

In general, an LSTM has three types of gates:

Forget Gate : represented as "ft"

Input Gate : represented as "it"

Output Gate : represented as "ot"

A gate, as the name suggests, is a barrier that controls what may pass. In the same way, the gates here are simply sigmoid activation functions, which give values ranging from 0 to 1 that determine how much of the information passed to them gets through.
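As a quick illustration of how a sigmoid acts as a gate, the sketch below multiplies some information element-wise by gate values. The numbers in `information` and `gate_logits` are made up for the example.

```python
import numpy as np

def sigmoid(z):
    """Squash any real value into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

information = np.array([2.0, -1.0, 0.5])     # values arriving at the gate
gate_logits = np.array([-10.0, 0.0, 10.0])   # hypothetical pre-activation gate values

gate = sigmoid(gate_logits)                  # close to 0, exactly 0.5, close to 1
passed = gate * information                  # element-wise filtering

print(gate)
print(passed)
```

A gate value near 0 blocks the corresponding entry, a value near 1 lets it through almost unchanged, and values in between let part of it through.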

The "Forget gate" is used to forget part of the memory, which is a very good technique for ridding the model of an overload of unwanted past information. Similarly, the "Input gate" decides which new information should be passed into the layer for further processing. Finally, the "Output gate" decides which information is output because it is important for further processing.
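The three gates can be put together in a single LSTM step. The sketch below follows the commonly used LSTM formulation, which also includes a candidate vector (`g_t`) and a cell state (`c_t`) that the article does not describe; the stacked weight layout, sizes, and random values are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4*H, D+H) and b has shape (4*H,)."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    f_t = sigmoid(z[0:H])            # forget gate: how much old memory to keep
    i_t = sigmoid(z[H:2 * H])        # input gate: how much new information to admit
    o_t = sigmoid(z[2 * H:3 * H])    # output gate: how much memory to expose
    g_t = np.tanh(z[3 * H:4 * H])    # candidate values to write into memory
    c_t = f_t * c_prev + i_t * g_t   # updated cell state (memory)
    h_t = o_t * np.tanh(c_t)         # updated hidden state (output)
    return h_t, c_t

# Hypothetical sizes and random weights, for illustration only
rng = np.random.default_rng(0)
D, H = 3, 4
W = rng.normal(scale=0.5, size=(4 * H, D + H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x in rng.normal(size=(5, D)):    # run five time steps
    h, c = lstm_step(x, h, c, W, b)
print(h)
print(c)
```

Because the cell state is updated by addition (`f_t * c_prev + i_t * g_t`) rather than by repeated matrix multiplication, information can persist across many steps when the forget gate stays close to 1.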
